Community Detection: Difference between revisions

| (51 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

Community (or cluster) detection is procedure by which subnetworks are identified within the overall network. The subnetworks are cliques/clusters/communities of nodes that generally coactivate together. | Community (or cluster) detection is procedure by which subnetworks are identified within the overall network. The subnetworks are cliques/clusters/communities of nodes that generally coactivate together. This page assumes that you have managed to get yourself a n×n adjacency matrix, '''''M''''', through hard work or thievery, and wish to identify the communities/subnetworks/cliques that might be lurking within. | ||

== Making Matrices Symmetrical == | |||

Some algorithms assume bidirectional weights (i.e., w_ij = w_ji), and will be expecting a symmetrical adjacency matrix. If this is not the case, this MATLAB command will set a bidirectional weight between any two nodes in '''M''' to the average of the directional weights: | |||

'''M_symmetrical''' = ('''M''' + '''M'''.')/2 | |||

== Binarizing/Thresholding Weight Matrices == | |||

Regardless of how you compute a connection (correlation, mutual information, neural network), you're generally going to have ''some'' value for each connection (i.e., every node is connected; it's just a matter of degree). The choice of how you decide which weights are ''"real"'' will impact the results of subsequent analyses, and you should be prepared for reviewers to ask you to rationalize your decisions: | |||

=== Statistical Threshold === | |||

====Based on Pearson Correlation Values==== | |||

If you used correlations, you may have a p-value associated with each connection. You may choose to keep all weights with a p-value exceeding some threshold (e.g., p<.0001, corrected for N*N comparisons, so often your threshold will be really really small). This approach is just as simple as it is arbitrary, so you might expect some flak. | |||

====Based on Statistical Distribution Parameters==== | |||

If you have a distribution of values for each weight (e.g., adjacency matrices from multiple individuals, or from multiple models), you might use something like a confidence interval to eliminate connections that have a distribution of weights that cross the zero line in the 95% CI because they are neither positive nor negative with sufficient reliability. Here's some sample code (note, this takes a while to run on large N): | |||

nodes=1000; | |||

ncalc=nodes*nodes; | |||

h=waitbar(0,'Starting'); | |||

PRUNEDM=zeros(nodes,nodes); | |||

%for each starting brain region | |||

ctr=0; | |||

for n=1:nodes | |||

for c=1:nodes | |||

x=squeeze(M(n,c,:)); %get path weight from region to category for all nets | |||

pd=fitdist(x,'Normal'); %fit to normal distribution | |||

ci=paramci(pd);%get 95%CI: ci(:,1) is CI for mu | |||

%if 95% CI crosses zero | |||

if(ci(1,1)<=0 && ci(2,1)>=0) | |||

PRUNEDM(n,c)=0; %set connection to zero | |||

else | |||

%otherwise set to the mean | |||

PRUNEDM(n,c)=pd.mu; | |||

end | |||

end | |||

ctr=ctr+nodes; | |||

perc=(ctr/ncalc)*100; | |||

waitbar(ctr/ncalc,h,sprintf('%.02f % complete',perc)); | |||

end | |||

=== Density-Based Thresholding === | |||

====Top N-ile ==== | |||

This was an old method used in one of the Sporns group's papers. If I recall correctly, the logic was something like: "10% of all possible brain connections exist, so we will retain the top 10%-ile of the weights (by magnitude), and set all other weights to zero". | |||

====Minimum Spanning Tree (MST)==== | |||

A graph-theoretic-based approach, this starts with an empty weight matrix, and adds weights according to an algorithm that I'll have to look up. MATLAB has a minspantree function requiring a Graph. You can create a graph from a vector, '''S''', of source nodes, '''T''' of target nodes, and a bidirectional (i.e., nonredunant) set of weights: | |||

S=[1 1 1 2 2 3]; | |||

T=[2 3 4 3 4 4]; | |||

w=[.5 .1 .2 .3 .4 .9]; | |||

G=graph(S,T,weights); | |||

[T,pred]=minspantree(G); | |||

'''NOTE:''' minimum spanning trees contain no cycles, by definition. This is going to be quite relevant for some of your network metrics (e.g., those that count triangles, which form a cycle), so consider this before using MST in your network pruning. | |||

Another Important thing to consider is that the weights in the MST graph represent distance. Distance is generally inversely proportional to strength, so if you have a weight matrix, '''W''', where big numbers represent strong connections, you probably want to create your graph using the inverse of your weights: | |||

G=graph(S,T, power(weights,-1); | |||

When you're starting off with an NxN adjacency matrix derived from time series correlations or extracted from an autoassociator network, there's some work to be done to translate that into the Graph object, '''G''' that the <code>minspantree</code> function expects. I have written a wrapper function, '''<code>minspan.m</code>''', that has solved all these problems for you. You can find in the Scripts/Matlab folder on UBFS. The function takes a 2-D adjacency matrix, '''ADJ''', as a parameter and will return the adjacency matrix representation, '''mst''', and other outputs of the minspantree() function, as well as the underlying graph object, '''G''': | |||

%suppose we have a matrix of '''timeseriesdata''' | |||

%compute all pairwise correlations to generate an NxN adjacency matrix, '''ADJ''' | |||

ADJ=corr(timeseriesdata); | |||

%produce the minimum spanning tree for this matrix | |||

ADJ=power(ADJ,-1); %calculate the reciprocal of the connection strengths so that strong weights are mapped to short distances | |||

%You may also need to eliminate or compute the absolute value of negative connections | |||

[mst, G, T, pred]=minspan(ADJ, 'method','sparse','root',1, 'type', 'forest'); | |||

The only required parameter is '''ADJ'''. The default minimum spanning tree produced uses the same parameters specified in the example above: it will be sparse, with the root node set to node 1, and returning a forest (i.e., multiple trees, if that's how the weights shake out). See the help for the MATLAB minspantree() function for details on what these options mean. | |||

====Minimum Connected Component==== | |||

A Minimum Connected Component is a subset of your weight matrix that retains the largest weights in descending order until every vertex is connected to at least one other vertex (at which point we stop adding weights). Algorithmically, this might take a while, if you go by the letter of the algorithm, but it turns out there's a trivial shortcut. Note that this code will not work properly if your connectivity matrix only includes the upper or lower-triangle. | |||

mcc=abs(weights); %absval because we care about magnitude | |||

mcc(eye(size(mcc,1))==1)=0; %remove self-connections | |||

%EASY BUTTON: | |||

mcc(mcc<min(max(mcc)))=0; %remove any connections smaller than that connecting the weakest-connected vertex | |||

%now have the weights with the largest magnitude that still allow all vertices to | |||

%remain connected | |||

isnegative=double(weights<0); %keep the +/- signs | |||

isnegative(isnegative==1)=-1; | |||

isnegative(isnegative==0)=1; | |||

mcc=mcc.*isnegative; %put back the - sign | |||

This code has been implemented in a function, '''<code>minconcomp.m</code>''', which you will find in the Scripts/Matlab folder on UBFS: | |||

mcc=minconcomp(ADJ) | |||

== Hierarchical Clustering == | == Hierarchical Clustering == | ||

Sarah Muldoon recommended starting off with a hierarchical clustering of the adjacency matrix in order to visualize the structure of the matrix and get a sense of the sort of structure that exists within the data. The [http://www.mathworks.com/help/stats/hierarchical-clustering.html MATLAB help page for hierarchical clustering] has some background information, but here are the highlights. | Sarah Muldoon recommended starting off with a hierarchical clustering of the adjacency matrix in order to visualize the structure of the matrix and get a sense of the sort of structure that exists within the data. The [http://www.mathworks.com/help/stats/hierarchical-clustering.html MATLAB help page for hierarchical clustering] has some background information, but here are the highlights. | ||

The first thing to note is that these steps are too computationally intensive for the adjacency matrices generated for the larger networks produced using the Lausanne 2008 parcellation (Lausanne 500 and 250 are too large). The myaparc_60 network | The first thing to note is that these steps are too computationally intensive for the adjacency matrices generated for the larger networks produced using the Lausanne 2008 parcellation (Lausanne 500 and 250 are too large). The myaparc_60 network is the largest parcellation produced by the Lausanne subparcellation scheme that can be processed using the Hierarchical Clustering method (i.e., the myaparc_125.annot and larger will not work). | ||

=== Obtain Linkage === | === Obtain Linkage === | ||

The first step of a hierarchical cluster is to obtain some kind of distance metric between each node in the graph. This information already exists in the form of the weighted adjacency matrices (either correlations or trained network weights). We pick up here at the linkage step. MATLAB has a <code>linkage</code> function that expects a vector of pairwise distances between all nodes, as would be generated by the <code>pdist</code> function. If you read the documentation for ''pdist'', you will find that it outputs a vector for all pairs ({2,1}, {3,1}, ... , { | The first step of a hierarchical cluster is to obtain some kind of distance metric between each node in the graph. This information already exists in the form of the weighted adjacency matrices (either correlations or trained network weights). We pick up here at the linkage step. MATLAB has a <code>linkage</code> function that expects a vector of pairwise distances between all nodes, as would be generated by the <code>pdist</code> function. If you read the documentation for ''pdist'', you will find that it outputs a vector for all pairs ({2,1}, {3,1}, ... , {n, 1}, {3, 2}, ..., {n, 2}, ... , {n, n-1}), where ''n'' is the number of nodes in the network. In relation to the adjacency matrices, these values would represent the lower (or upper) triangle of the matrix. | ||

==== Filter Weights ==== | ==== Filter Weights ==== | ||

The lower triangle of the adjacency matrix can be obtained in MATLAB using the <code>tril</code> function. An | The lower triangle of the adjacency matrix can be obtained in MATLAB using the <code>tril</code> function. An n×n (i.e., square) matrix has k diagonals, numbered 0 to (-1)n-1 for the lower triangle, and numbered 0 to n-1 for the upper triangle. The main diagonal, k=0, will be the diagonal containing all ones (in a correlation matrix), which are meaningless in this context. We will want to get all the diagonals where k<0 (if you are getting the upper triangle using the <code>triu</code> function, use k>0). | ||

mask=tril(ones(size(M)),-1); %identify the elements in the lower triangle, excluding diagonal k=0 | mask=tril(ones(size(M)),-1); %identify the elements in the lower triangle, excluding diagonal k=0 | ||

lowert=M(find(mask)); %use mask to obtain the values. | lowert=M(find(mask)); %use mask to obtain the values. | ||

| Line 57: | Line 137: | ||

The first thing to note is that the genlouvain function doesn't work directly on the adjacency matrix, but instead on a modularity matrix, which is ''derived from'' your adjacency matrix. The number of partitions that are detected is influenced in part by a parameter, gamma, that is often omitted when this modularity matrix is calculated. When omitted, it defaults to 1, however larger gammas permit a greater number of final partitions. The following code sample is pulled from the genlouvain wiki site. The MATLAB function, <code>full</code>, transforms a sparse matrix into a full matrix. Sparse matrices are a more compact way of storing large data sets when there are a large number of empty values. I'm not clear whether the code works properly if the adjacency matrix, '''''A''''', starts off as a full matrix, so instead I will begin by transforming the full matrix '''''M''''' (e.g., a 1000 x 1000 network) into a sparse matrix data type and proceed from there. | The first thing to note is that the genlouvain function doesn't work directly on the adjacency matrix, but instead on a modularity matrix, which is ''derived from'' your adjacency matrix. The number of partitions that are detected is influenced in part by a parameter, gamma, that is often omitted when this modularity matrix is calculated. When omitted, it defaults to 1, however larger gammas permit a greater number of final partitions. The following code sample is pulled from the genlouvain wiki site. The MATLAB function, <code>full</code>, transforms a sparse matrix into a full matrix. Sparse matrices are a more compact way of storing large data sets when there are a large number of empty values. I'm not clear whether the code works properly if the adjacency matrix, '''''A''''', starts off as a full matrix, so instead I will begin by transforming the full matrix '''''M''''' (e.g., a 1000 x 1000 network) into a sparse matrix data type and proceed from there. | ||

A=sparse(M); | A=sparse(M); | ||

gamma = 1. | gamma = 1.15; %gamma=1 tends to produce 3-community solutions. Higher values allow more communities. | ||

k = full(sum(A)); | k = full(sum(A)); | ||

twom = sum(k); %twom ("2m") is a normalization parameter | twom = sum(k); %twom ("2m") is a normalization parameter | ||

B = full(A - gamma*k'*k/twom); %B is the modularity matrix | B = full(A - gamma*k'*k/twom); %B is the modularity matrix | ||

As for choice of ''gamma'': how many communities is the right number? Unfortunately, that's another arbitrary decision. However, if you want to anchor your decision to something, [[https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3174820/ Yeo et al.]] settled on 7 and 17 communities, so you could play around with gamma until you get a 7 community solution. | |||

=== Detect Communities Using <code>genlouvain</code> === | === Detect Communities Using <code>genlouvain</code> === | ||

| Line 67: | Line 149: | ||

Q = Q/twom | Q = Q/twom | ||

Because this algorithm is stochastic, repeated applications of the genlouvain function are likely to generate somewhat different partitionings. Fortunately, the code runs rather quickly on relatively small networks, and so we can run the function repeatedly and store each partitioning. | Because this algorithm is stochastic, repeated applications of the genlouvain function are likely to generate somewhat different partitionings. Fortunately, the code runs rather quickly on relatively small networks, and so we can run the function repeatedly and store each partitioning. | ||

maxiterations= | maxiterations=100; %how many partitioning attempts do you want? | ||

S=repmat(NaN, maxiterations, length(k)); %preallocate storage for each partitioning solution | S=repmat(NaN, maxiterations, length(k)); %preallocate storage for each partitioning solution | ||

Q=repmat(NaN, maxiterations, 1); %preallocate storage for the modularity of each partitioning solution | Q=repmat(NaN, maxiterations, 1); %preallocate storage for the modularity of each partitioning solution | ||

| Line 82: | Line 164: | ||

'''''S2''''' is a matrix of <code>size(S)</code>. <code>mode(S2)</code> can be used to list the representative partition for each node in the adjacency matrix, '''''M'''''. Most, if not all, rows of S2 will be the same, and <code>mode(S2)</code> will pick out the partitioning of '''''S2''''' with the most votes. I haven't looked too deeply into the guts and math behind the code, but presumably the consistency among rows of S2 matrix will be reflected in ''qpc'' -- the quality of the consensus, where smaller values are better. | '''''S2''''' is a matrix of <code>size(S)</code>. <code>mode(S2)</code> can be used to list the representative partition for each node in the adjacency matrix, '''''M'''''. Most, if not all, rows of S2 will be the same, and <code>mode(S2)</code> will pick out the partitioning of '''''S2''''' with the most votes. I haven't looked too deeply into the guts and math behind the code, but presumably the consistency among rows of S2 matrix will be reflected in ''qpc'' -- the quality of the consensus, where smaller values are better. | ||

If you are working with multiple community partitionings of related networks (e.g., one network per run from the same participant within- or across-sessions), you might find it interesting to compare the consensus best partitionings to get a sense of the stability of these community assignments: | If you are working with multiple community partitionings of related networks (e.g., one network per run from the same participant within- or across-sessions), you might find it interesting to compare the consensus best partitionings to get a sense of the stability of these community assignments. The example below shows the cbp for four networks generated from four runs of the same experiment (each column represents the community assignment for each node within one of the networks, and each row represents the assignment for a given node in each of the four networks): | ||

>> cbpart=squeeze(mode(S2)) | >> cbpart=squeeze(mode(S2)) | ||

| Line 95: | Line 177: | ||

... etc. | ... etc. | ||

There may well already be some analytic procedure that has been proposed and/or applied, and if one comes to light, it'll be described here. Until then, however, bear in mind that the community assignments are independent across the communities. For example, in the first line of the example output above, the first region was assigned to community '1' in all 4 adjacency matrices. However it is important to realize that community '1' in the first network may comprise an | There may well already be some analytic procedure that has been proposed and/or applied, and if one comes to light, it'll be described here. Until then, however, bear in mind that the community assignments are independent across the communities. For example, in the first line of the example output above, the first region was assigned to community '1' in all 4 adjacency matrices. However it is important to realize that community '1' in the first network may comprise an mostly different set of regions than community '1' in the second network. I expect that this potential discrepancy becomes more problematic with a greater number of discovered communities. Rather than look at what communities are assigned to each region, the correct thing to do is to look at what regions are assigned to each community. It is possible, for example, that community 1 in network A comprises the same regions as those belonging to community 2 in network B -- in this case, membership is perfectly consistent between the two networks for this particular community; it's merely the case that the community is labeled '1', in network A, and '2' in network B. That said, I've not yet seen a cbpart matrix where the first row wasn't all ones. Curious... | ||

=== Visualize Communities === | === Visualize Communities === | ||

At this point you will have decided on the community assignments for each region in your adjacency matrix '''''M'''''. Let us suppose you have used the consensus best partitioning for a given network, and are storing these assignments in '''''cbpart'''''. At this point, if you had dropped any regions from your network, you will need to re-insert a placeholder value for those regions in '''''cbpart''''': | At this point you will have decided on the community assignments for each region in your adjacency matrix '''''M'''''. Let us suppose you have used the consensus best partitioning for a given network, and are storing these assignments in '''''cbpart'''''. At this point, if you had dropped any regions from your network, you will need to re-insert a placeholder value for those regions in '''''cbpart''''': | ||

% Assuming clustering was done on an adjacency matrix containing both hemispheres, | % Assuming clustering was done on an adjacency matrix containing both hemispheres, | ||

% you will have to first split partition vector so that you're only operating on the lh or rh regions | % you will have to first split partition vector so that | ||

% The next line is | % you're only operating on the lh or rh regions | ||

% The next line is for the myaparc_60 .annot file, which had 59 entries per hemi | |||

% and dropped 2 regions (''unknown'' and ''corpuscallosum'') in each hemi | % and dropped 2 regions (''unknown'' and ''corpuscallosum'') in each hemi | ||

% This leaves 57 per hemi: | % This leaves 57 per hemi: | ||

| Line 107: | Line 190: | ||

% originally dropped regions 1 and 5 from the adjacency matrix | % originally dropped regions 1 and 5 from the adjacency matrix | ||

% we now have to insert placeholder values (cluster=0) for those two regions | % we now have to insert placeholder values (cluster=0) for those two regions | ||

cbpart_lh=[0 | cbpart_lh=[0 cbpart_lh(1:3) 0 cbpart_lh(4:end)]; | ||

%the resulting vector | %the resulting vector has a 0 inserted at elements 1 and 5 and is 2 elements larger | ||

%Now cbpart_lh has 59 entries, which matches the number of entries in the | |||

%original set of regions returned by <code>parseFSSegments.m</code> | |||

%see also [http://stackoverflow.com/questions/27563001/inserting-multiple-rows-into-matrix-shifting-existing-rows this link] | %see also [http://stackoverflow.com/questions/27563001/inserting-multiple-rows-into-matrix-shifting-existing-rows this link] | ||

====Rendering in BrainNet Viewer==== | |||

This option is getting promoted because it is centered around the concept of the brain as a network, which is reflected in how the data is visualized. Your first task here is going to be to generate .node (and possibly .edge) files. | |||

=====Creating .node Files===== | |||

If you haven't already, you will need the XYZ coordinates for each of your labels. If you're working with the 1000 node Lausanne parcellation, I've already computed these and saved them in ''regionlabels.mat'', which you can find in the ubfs/Scripts/Matlab folder. You will also need a way to map each node in your adjacency matrix on to the associated label. If you're working with the full adjacency matrix, then the mapping is trivial: node 1 in your adjacency matrix corresponds to label 1 in the regionlabels matrix. | |||

If, however, you've simplified your network (e.g., dropped regions that didn't show significant task activation), things get a little more complicated. You will need to figure out for example that node 1 in your adjacency matrix is <code>lh.superiorfrontal_27</code>, which is actually entry 398 in the lausanne labels, because that's the only way you're going to figure out the correct X, Y, Z coordinates for the nodes in your reduced network. | |||

This is documented elsewhere, but repeated here: If you have two sets of cell arrays (e.g., '''lausanne_labels''' and '''cluster_labels'''), and you want to find out which elements in lausanne_labels map to the elements in cluster_labels, use the <code>ismember</code> MATLAB function: | |||

'''r'''=find(ismember(lausanne_labels, cluster_labels)); | |||

%this gives the indices of the elements in lausanne_labels | |||

%that overlap with those in cluster_labels | |||

%You can inspect for yourself by calling: | |||

%(labels(r)) | |||

M_reduced=M(r,r); %this selects the subset of M corresponding to the overlapping node indices | |||

%note that they will now also be sorted in the same order as they appear in '''r''' | |||

%This means that the first region in '''M_reduced''' will probably not be the same | |||

%as the first region in '''cluster_labels''' | |||

If we analyzed communities within '''M_reduced''' in the context of the Lausanne parcellation, then the relevant node information would be in XYZ(r), and labels(r). Here's a snippet of code that will generate a .node file: | |||

%Community assignments for nodes are stored in cbpart | |||

nodefileptr=fopen('ReducedNet.node','w'); | |||

for node=1:length(r) | |||

thisxyz=XYZ(r(node),:); %get the coordinates for the node | |||

thislabel=labels{r(node)}; %get the node label | |||

thiscomm=cbpart(node); %get the community assignment | |||

%build the string to print to the node file | |||

%format: X Y Z category size name | |||

outstring=sprintf('%.2f\t%.2f\t%.2f\t%.2f\t%.2f\t%s\n', ... | |||

thisxyz(1), thisxyz(2), thisxyz(3), ... | |||

thiscomm, 1 , thislabel); | |||

fprintf(nodefileptr, outstring); | |||

end | |||

fclose(nodefileptr); | |||

=====Creating .edge Files===== | |||

This is trivially easy, since BrainNet Viewer .edge files are just tab-delimited text files describing the adjacency matrix: | |||

dlmwrite('ReducedNet.edge', '''M_reduced''', 'delimiter', '\t', 'precision', 3); | |||

==== Rendering in FreeSurfer ==== | |||

Your main task here is to generate a new CTAB file with the new rgb color assignments for each of the regions appearing in the .annot file (this is why we had to insert placeholder values for any dropped regions: If no entry if provided for these regions, this can be a problem). A MATLAB utility can be found in the ubfs Scripts/Matlab folder, called <code>generateCTAB.m</code>. When added to your Matlab path, you can pass this function your vector of community assignments and your region names. Be sure to read the help for this function, as there are a few other useful options, as well as the stipulation that the segID for the regions start at 0 and end at n-1 -- if this is not the case for your .annot file then you will have to provide a vector of segID values. | |||

generateCTAB('classes', cbpart_lh, 'regions', lh_regions, 'prefix', 'lh_myaparc_60_clustered'); | generateCTAB('classes', cbpart_lh, 'regions', lh_regions, 'prefix', 'lh_myaparc_60_clustered'); | ||

After generating new color tables for each hemisphere, you will have 2 new .annot.ctab files (''?h_myaparc_60_clustered.annot.ctab'') in your current directory. The next step uses a hand MATLAB function called replace_ctab.m, written by [http://brainder.org Anderson Winkler] (found in the ubfs Scripts/Matlab folder). | After generating new color tables for each hemisphere, you will have 2 new .annot.ctab files (''?h_myaparc_60_clustered.annot.ctab'') in your current directory. The next step uses a hand MATLAB function called replace_ctab.m, written by [http://brainder.org Anderson Winkler] (found in the ubfs Scripts/Matlab folder). | ||

%assuming that the SUBJECTS_DIR environment variable is visible | |||

subjects_dir=getenv('SUBJECTS_DIR'); | |||

subject_id='FS_T1_501'; | |||

labeldir=[subjects_dir filesep subject_id filesep 'label']; | |||

source_annot=[labeldir filesep 'lh.myaparc_60.annot']; | |||

new_ctab=[lh_myaparc_60_clustered.annot.ctab']; | |||

new_annot=[labeldir filesep ... | |||

'lh.myaparc_60_clust.annot']; | |||

replace_ctab( source_annot, new_ctab, new_annot ); | |||

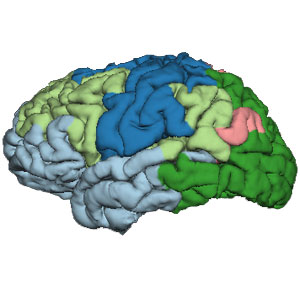

The communities within your network are easily visualized using your newly created .annot file in Freesurfer. From your terminal window: | |||

SUBJECT_ID=FS_T1_501 | |||

LABELDIR=${SUBJECTS_DIR}/${SUBJECT_ID}/label | |||

tksurfer ${SUBJECT_ID} lh pial -annot ${LABELDIR}/lh.myaparc_60_clust.annot | |||

[[File:Surface_Clusters.jpg]] | |||

[[Category: Networks]] | [[Category: Networks]] | ||

[[Category: Time Series]] | [[Category: Time Series]] | ||

Latest revision as of 17:42, 5 November 2020

Community (or cluster) detection is procedure by which subnetworks are identified within the overall network. The subnetworks are cliques/clusters/communities of nodes that generally coactivate together. This page assumes that you have managed to get yourself a n×n adjacency matrix, M, through hard work or thievery, and wish to identify the communities/subnetworks/cliques that might be lurking within.

Making Matrices Symmetrical

Some algorithms assume bidirectional weights (i.e., w_ij = w_ji), and will be expecting a symmetrical adjacency matrix. If this is not the case, this MATLAB command will set a bidirectional weight between any two nodes in M to the average of the directional weights:

M_symmetrical = (M + M.')/2

Binarizing/Thresholding Weight Matrices

Regardless of how you compute a connection (correlation, mutual information, neural network), you're generally going to have some value for each connection (i.e., every node is connected; it's just a matter of degree). The choice of how you decide which weights are "real" will impact the results of subsequent analyses, and you should be prepared for reviewers to ask you to rationalize your decisions:

Statistical Threshold

Based on Pearson Correlation Values

If you used correlations, you may have a p-value associated with each connection. You may choose to keep all weights with a p-value exceeding some threshold (e.g., p<.0001, corrected for N*N comparisons, so often your threshold will be really really small). This approach is just as simple as it is arbitrary, so you might expect some flak.

Based on Statistical Distribution Parameters

If you have a distribution of values for each weight (e.g., adjacency matrices from multiple individuals, or from multiple models), you might use something like a confidence interval to eliminate connections that have a distribution of weights that cross the zero line in the 95% CI because they are neither positive nor negative with sufficient reliability. Here's some sample code (note, this takes a while to run on large N):

nodes=1000;

ncalc=nodes*nodes;

h=waitbar(0,'Starting');

PRUNEDM=zeros(nodes,nodes);

%for each starting brain region

ctr=0;

for n=1:nodes

for c=1:nodes

x=squeeze(M(n,c,:)); %get path weight from region to category for all nets

pd=fitdist(x,'Normal'); %fit to normal distribution

ci=paramci(pd);%get 95%CI: ci(:,1) is CI for mu

%if 95% CI crosses zero

if(ci(1,1)<=0 && ci(2,1)>=0)

PRUNEDM(n,c)=0; %set connection to zero

else

%otherwise set to the mean

PRUNEDM(n,c)=pd.mu;

end

end

ctr=ctr+nodes;

perc=(ctr/ncalc)*100;

waitbar(ctr/ncalc,h,sprintf('%.02f % complete',perc));

end

Density-Based Thresholding

Top N-ile

This was an old method used in one of the Sporns group's papers. If I recall correctly, the logic was something like: "10% of all possible brain connections exist, so we will retain the top 10%-ile of the weights (by magnitude), and set all other weights to zero".

Minimum Spanning Tree (MST)

A graph-theoretic-based approach, this starts with an empty weight matrix, and adds weights according to an algorithm that I'll have to look up. MATLAB has a minspantree function requiring a Graph. You can create a graph from a vector, S, of source nodes, T of target nodes, and a bidirectional (i.e., nonredunant) set of weights:

S=[1 1 1 2 2 3]; T=[2 3 4 3 4 4]; w=[.5 .1 .2 .3 .4 .9]; G=graph(S,T,weights); [T,pred]=minspantree(G);

NOTE: minimum spanning trees contain no cycles, by definition. This is going to be quite relevant for some of your network metrics (e.g., those that count triangles, which form a cycle), so consider this before using MST in your network pruning.

Another Important thing to consider is that the weights in the MST graph represent distance. Distance is generally inversely proportional to strength, so if you have a weight matrix, W, where big numbers represent strong connections, you probably want to create your graph using the inverse of your weights:

G=graph(S,T, power(weights,-1);

When you're starting off with an NxN adjacency matrix derived from time series correlations or extracted from an autoassociator network, there's some work to be done to translate that into the Graph object, G that the minspantree function expects. I have written a wrapper function, minspan.m, that has solved all these problems for you. You can find in the Scripts/Matlab folder on UBFS. The function takes a 2-D adjacency matrix, ADJ, as a parameter and will return the adjacency matrix representation, mst, and other outputs of the minspantree() function, as well as the underlying graph object, G:

%suppose we have a matrix of timeseriesdata %compute all pairwise correlations to generate an NxN adjacency matrix, ADJ ADJ=corr(timeseriesdata); %produce the minimum spanning tree for this matrix ADJ=power(ADJ,-1); %calculate the reciprocal of the connection strengths so that strong weights are mapped to short distances %You may also need to eliminate or compute the absolute value of negative connections [mst, G, T, pred]=minspan(ADJ, 'method','sparse','root',1, 'type', 'forest');

The only required parameter is ADJ. The default minimum spanning tree produced uses the same parameters specified in the example above: it will be sparse, with the root node set to node 1, and returning a forest (i.e., multiple trees, if that's how the weights shake out). See the help for the MATLAB minspantree() function for details on what these options mean.

Minimum Connected Component

A Minimum Connected Component is a subset of your weight matrix that retains the largest weights in descending order until every vertex is connected to at least one other vertex (at which point we stop adding weights). Algorithmically, this might take a while, if you go by the letter of the algorithm, but it turns out there's a trivial shortcut. Note that this code will not work properly if your connectivity matrix only includes the upper or lower-triangle.

mcc=abs(weights); %absval because we care about magnitude mcc(eye(size(mcc,1))==1)=0; %remove self-connections %EASY BUTTON: mcc(mcc<min(max(mcc)))=0; %remove any connections smaller than that connecting the weakest-connected vertex %now have the weights with the largest magnitude that still allow all vertices to %remain connected isnegative=double(weights<0); %keep the +/- signs isnegative(isnegative==1)=-1; isnegative(isnegative==0)=1; mcc=mcc.*isnegative; %put back the - sign

This code has been implemented in a function, minconcomp.m, which you will find in the Scripts/Matlab folder on UBFS:

mcc=minconcomp(ADJ)

Hierarchical Clustering

Sarah Muldoon recommended starting off with a hierarchical clustering of the adjacency matrix in order to visualize the structure of the matrix and get a sense of the sort of structure that exists within the data. The MATLAB help page for hierarchical clustering has some background information, but here are the highlights.

The first thing to note is that these steps are too computationally intensive for the adjacency matrices generated for the larger networks produced using the Lausanne 2008 parcellation (Lausanne 500 and 250 are too large). The myaparc_60 network is the largest parcellation produced by the Lausanne subparcellation scheme that can be processed using the Hierarchical Clustering method (i.e., the myaparc_125.annot and larger will not work).

Obtain Linkage

The first step of a hierarchical cluster is to obtain some kind of distance metric between each node in the graph. This information already exists in the form of the weighted adjacency matrices (either correlations or trained network weights). We pick up here at the linkage step. MATLAB has a linkage function that expects a vector of pairwise distances between all nodes, as would be generated by the pdist function. If you read the documentation for pdist, you will find that it outputs a vector for all pairs ({2,1}, {3,1}, ... , {n, 1}, {3, 2}, ..., {n, 2}, ... , {n, n-1}), where n is the number of nodes in the network. In relation to the adjacency matrices, these values would represent the lower (or upper) triangle of the matrix.

Filter Weights

The lower triangle of the adjacency matrix can be obtained in MATLAB using the tril function. An n×n (i.e., square) matrix has k diagonals, numbered 0 to (-1)n-1 for the lower triangle, and numbered 0 to n-1 for the upper triangle. The main diagonal, k=0, will be the diagonal containing all ones (in a correlation matrix), which are meaningless in this context. We will want to get all the diagonals where k<0 (if you are getting the upper triangle using the triu function, use k>0).

mask=tril(ones(size(M)),-1); %identify the elements in the lower triangle, excluding diagonal k=0 lowert=M(find(mask)); %use mask to obtain the values.

The above code returns the values from the lower triangle of the adjacency matrix M as a vector in the same order that pdist generates.

Run linkage

There are several methods for running the linkage function, but common choices are single, complete, and average.

Z=linkage(lowert,'average');

Note that the solutions provided by each of these methods can yield drastically different results. For example, while I was working through this workflow for the first time, I used the single linkage distance calculation method. The sorted correlation matrix displayed at the end didn't seem to have any well-defined clusters of any size (leading me to assume that I had done something wrong). Since there doesn't seem to be any "correct" method, and the purpose of this exercise is to identify some sort of underlying structure to the weights, you might try different methods until you find something that makes that structure apparent.

Generate the Dendrogram

Using the dendrogram function, you'll need to force it to provide the entire leaf structure, specifying p, the maximum number of leaf nodes (if not specified, the default behavior is to display up to 30 leaf nodes):

[H, T, outperm] = dendrogram(Z, size(M,1));

Looking at the dendrogram can be used to inform the number of clusters you might look for in a data set (e.g., if something like k-means clustering was subsequently employed).

Sort and Visualize Adjacency Matrices

Outperm vector

Use the outperm vector generated by the dendrogram as a sorting index for the adjacency matrix to reveal the network clustering structure.

sorted_M=M(outperm,outperm); figure(2); imagesc(sorted_M);

Simulated Annealing

This sounds fancy and tricky, but it's easily and quickly applied using the reorder_matrix.m function appearing in the Rubinov & Sporns (2010) Brain Connectivity Toolbox, which can be found in the ubfs Scripts/Matlab/BCT folder (file archive downloaded from the website, in the file BCT.zip).

[sorted_M, sortorder,cost]=reorder_matrix(M, 'line', 0); figure(3); imagesc(sorted_M);

In truth, the matrices generated by the simulated annealing method have more clearly visible modules than those generated by applying the outperm vector. I'm not sure why that would be, as the outperm vectors are a pretty transparent way of grouping related nodes together (unless the method described above has an error in it!).

Visualize Multiple Adjacency Matrices

Suppose you have several sorted adjacency matrices that you would like to display side-by-side. Use the subplot function to set up a grid-like organization of your figure:

subplot(2,2,1); %indexing within a 2x2 grid, the next thing you will be displaying will go in slot 1, which is the 1st row, 1st column imagesc(sorted_run1); subplot(2,2,2); %still in the 2x2 grid, the next displayed item goes into slot 2; slots increment by column within a row, until end of row is reached imagesc(sorted_run2); subplot(2,2,3); %3rd slot is the first column of the second row imagesc(sorted_run3); subplot(2,2,4); %4th slot is the 2nd column of the 2nd row imagesc(sorted_run4);

This might be obvious to you, but when calling subplot(a,b,k) to visualize matrix K out of n total matrices, make sure that a × b ≥ n

Stochastic Clustering

A powerful and widely-used clustering algorithm is the Louvain greedy clustering algorithm. It's stochastic (i.e., contains a random element to it) because it's computationally intractable to completely search the entire space of possible clusterings for anything other than small "toy" networks.

The Louvain algorithm is described here, and has been incorporated in a collection of MATLAB network analysis scripts archived here and here but also stored in our ubfs Scripts/Matlab folder.

Create a Modularity Matrix

The first thing to note is that the genlouvain function doesn't work directly on the adjacency matrix, but instead on a modularity matrix, which is derived from your adjacency matrix. The number of partitions that are detected is influenced in part by a parameter, gamma, that is often omitted when this modularity matrix is calculated. When omitted, it defaults to 1, however larger gammas permit a greater number of final partitions. The following code sample is pulled from the genlouvain wiki site. The MATLAB function, full, transforms a sparse matrix into a full matrix. Sparse matrices are a more compact way of storing large data sets when there are a large number of empty values. I'm not clear whether the code works properly if the adjacency matrix, A, starts off as a full matrix, so instead I will begin by transforming the full matrix M (e.g., a 1000 x 1000 network) into a sparse matrix data type and proceed from there.

A=sparse(M);

gamma = 1.15; %gamma=1 tends to produce 3-community solutions. Higher values allow more communities.

k = full(sum(A));

twom = sum(k); %twom ("2m") is a normalization parameter

B = full(A - gamma*k'*k/twom); %B is the modularity matrix

As for choice of gamma: how many communities is the right number? Unfortunately, that's another arbitrary decision. However, if you want to anchor your decision to something, [Yeo et al.] settled on 7 and 17 communities, so you could play around with gamma until you get a 7 community solution.

Detect Communities Using genlouvain

At this point, you now have B, the modularity matrix for the adjacency matrix M. Running the genlouvain function on B will stochastically generate a partitioning of your network that maximizes modularity (within its search space).

[S,Q] = genlouvain(B); Q = Q/twom

Because this algorithm is stochastic, repeated applications of the genlouvain function are likely to generate somewhat different partitionings. Fortunately, the code runs rather quickly on relatively small networks, and so we can run the function repeatedly and store each partitioning.

maxiterations=100; %how many partitioning attempts do you want? S=repmat(NaN, maxiterations, length(k)); %preallocate storage for each partitioning solution Q=repmat(NaN, maxiterations, 1); %preallocate storage for the modularity of each partitioning solution for i=1:maxiterations [s, q]=genlouvain(B); q=q/twom; S(i,:)=s(:); Q(i)=q; end

Determine the Consensus Best Partitioning

With maxiterations different partitionings of your adjacency matrix into different communities (stored in matrix S in the previous step), we can ascertain the consensus community assignment (i.e., the modal partitioning) for each node in the network. The commdetect toolbox has a function, consensus_iterative for that task.

[S2 Q2 X_new3 qpc] = consensus_iterative(S);

S2 is a matrix of size(S). mode(S2) can be used to list the representative partition for each node in the adjacency matrix, M. Most, if not all, rows of S2 will be the same, and mode(S2) will pick out the partitioning of S2 with the most votes. I haven't looked too deeply into the guts and math behind the code, but presumably the consistency among rows of S2 matrix will be reflected in qpc -- the quality of the consensus, where smaller values are better.

If you are working with multiple community partitionings of related networks (e.g., one network per run from the same participant within- or across-sessions), you might find it interesting to compare the consensus best partitionings to get a sense of the stability of these community assignments. The example below shows the cbp for four networks generated from four runs of the same experiment (each column represents the community assignment for each node within one of the networks, and each row represents the assignment for a given node in each of the four networks):

>> cbpart=squeeze(mode(S2))

cbpart =

1 1 1 1

2 2 2 2

3 2 3 3

4 3 4 4

1 1 5 1

4 3 4 4

... etc.

There may well already be some analytic procedure that has been proposed and/or applied, and if one comes to light, it'll be described here. Until then, however, bear in mind that the community assignments are independent across the communities. For example, in the first line of the example output above, the first region was assigned to community '1' in all 4 adjacency matrices. However it is important to realize that community '1' in the first network may comprise an mostly different set of regions than community '1' in the second network. I expect that this potential discrepancy becomes more problematic with a greater number of discovered communities. Rather than look at what communities are assigned to each region, the correct thing to do is to look at what regions are assigned to each community. It is possible, for example, that community 1 in network A comprises the same regions as those belonging to community 2 in network B -- in this case, membership is perfectly consistent between the two networks for this particular community; it's merely the case that the community is labeled '1', in network A, and '2' in network B. That said, I've not yet seen a cbpart matrix where the first row wasn't all ones. Curious...

Visualize Communities

At this point you will have decided on the community assignments for each region in your adjacency matrix M. Let us suppose you have used the consensus best partitioning for a given network, and are storing these assignments in cbpart. At this point, if you had dropped any regions from your network, you will need to re-insert a placeholder value for those regions in cbpart:

% Assuming clustering was done on an adjacency matrix containing both hemispheres,

% you will have to first split partition vector so that

% you're only operating on the lh or rh regions

% The next line is for the myaparc_60 .annot file, which had 59 entries per hemi

% and dropped 2 regions (unknown and corpuscallosum) in each hemi

% This leaves 57 per hemi:

cbpart_lh=cpbart(1:57);

% originally dropped regions 1 and 5 from the adjacency matrix

% we now have to insert placeholder values (cluster=0) for those two regions

cbpart_lh=[0 cbpart_lh(1:3) 0 cbpart_lh(4:end)];

%the resulting vector has a 0 inserted at elements 1 and 5 and is 2 elements larger

%Now cbpart_lh has 59 entries, which matches the number of entries in the

%original set of regions returned by parseFSSegments.m

%see also this link

Rendering in BrainNet Viewer

This option is getting promoted because it is centered around the concept of the brain as a network, which is reflected in how the data is visualized. Your first task here is going to be to generate .node (and possibly .edge) files.

Creating .node Files

If you haven't already, you will need the XYZ coordinates for each of your labels. If you're working with the 1000 node Lausanne parcellation, I've already computed these and saved them in regionlabels.mat, which you can find in the ubfs/Scripts/Matlab folder. You will also need a way to map each node in your adjacency matrix on to the associated label. If you're working with the full adjacency matrix, then the mapping is trivial: node 1 in your adjacency matrix corresponds to label 1 in the regionlabels matrix.

If, however, you've simplified your network (e.g., dropped regions that didn't show significant task activation), things get a little more complicated. You will need to figure out for example that node 1 in your adjacency matrix is lh.superiorfrontal_27, which is actually entry 398 in the lausanne labels, because that's the only way you're going to figure out the correct X, Y, Z coordinates for the nodes in your reduced network.

This is documented elsewhere, but repeated here: If you have two sets of cell arrays (e.g., lausanne_labels and cluster_labels), and you want to find out which elements in lausanne_labels map to the elements in cluster_labels, use the ismember MATLAB function:

r=find(ismember(lausanne_labels, cluster_labels)); %this gives the indices of the elements in lausanne_labels %that overlap with those in cluster_labels %You can inspect for yourself by calling: %(labels(r)) M_reduced=M(r,r); %this selects the subset of M corresponding to the overlapping node indices %note that they will now also be sorted in the same order as they appear in r %This means that the first region in M_reduced will probably not be the same %as the first region in cluster_labels

If we analyzed communities within M_reduced in the context of the Lausanne parcellation, then the relevant node information would be in XYZ(r), and labels(r). Here's a snippet of code that will generate a .node file:

%Community assignments for nodes are stored in cbpart

nodefileptr=fopen('ReducedNet.node','w');

for node=1:length(r)

thisxyz=XYZ(r(node),:); %get the coordinates for the node

thislabel=labels{r(node)}; %get the node label

thiscomm=cbpart(node); %get the community assignment

%build the string to print to the node file

%format: X Y Z category size name

outstring=sprintf('%.2f\t%.2f\t%.2f\t%.2f\t%.2f\t%s\n', ...

thisxyz(1), thisxyz(2), thisxyz(3), ...

thiscomm, 1 , thislabel);

fprintf(nodefileptr, outstring);

end

fclose(nodefileptr);

Creating .edge Files

This is trivially easy, since BrainNet Viewer .edge files are just tab-delimited text files describing the adjacency matrix:

dlmwrite('ReducedNet.edge', M_reduced, 'delimiter', '\t', 'precision', 3);

Rendering in FreeSurfer

Your main task here is to generate a new CTAB file with the new rgb color assignments for each of the regions appearing in the .annot file (this is why we had to insert placeholder values for any dropped regions: If no entry if provided for these regions, this can be a problem). A MATLAB utility can be found in the ubfs Scripts/Matlab folder, called generateCTAB.m. When added to your Matlab path, you can pass this function your vector of community assignments and your region names. Be sure to read the help for this function, as there are a few other useful options, as well as the stipulation that the segID for the regions start at 0 and end at n-1 -- if this is not the case for your .annot file then you will have to provide a vector of segID values.

generateCTAB('classes', cbpart_lh, 'regions', lh_regions, 'prefix', 'lh_myaparc_60_clustered');

After generating new color tables for each hemisphere, you will have 2 new .annot.ctab files (?h_myaparc_60_clustered.annot.ctab) in your current directory. The next step uses a hand MATLAB function called replace_ctab.m, written by Anderson Winkler (found in the ubfs Scripts/Matlab folder).

%assuming that the SUBJECTS_DIR environment variable is visible

subjects_dir=getenv('SUBJECTS_DIR');

subject_id='FS_T1_501';

labeldir=[subjects_dir filesep subject_id filesep 'label'];

source_annot=[labeldir filesep 'lh.myaparc_60.annot'];

new_ctab=[lh_myaparc_60_clustered.annot.ctab'];

new_annot=[labeldir filesep ...

'lh.myaparc_60_clust.annot'];

replace_ctab( source_annot, new_ctab, new_annot );

The communities within your network are easily visualized using your newly created .annot file in Freesurfer. From your terminal window:

SUBJECT_ID=FS_T1_501

LABELDIR=${SUBJECTS_DIR}/${SUBJECT_ID}/label

tksurfer ${SUBJECT_ID} lh pial -annot ${LABELDIR}/lh.myaparc_60_clust.annot